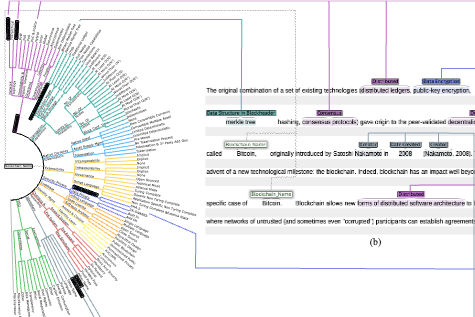

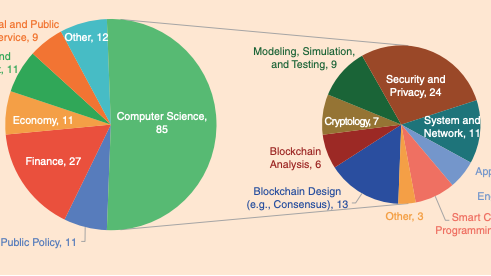

Hernandez, W., Tylinski, K., Moore, A., Roche, N., Vadgama, N., Treiblmaier, H., Shangguan, J., Tasca, P., & Xu, J. (2025). Evolution of ESG-focused DLT Research: An NLP Analysis of the Literature. Quantitative Science Studies. https://arxiv.org/abs/2308.12420

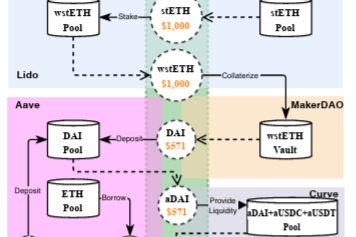

Luo, Y., Feng, Y., Xu, J., & Tasca, P. (2025). Piercing the Veil of TVL: DeFi Reappraised. International Conference on Financial Cryptography and Data Security (FC). https://fc25.ifca.ai/preproceedings/94.pdf

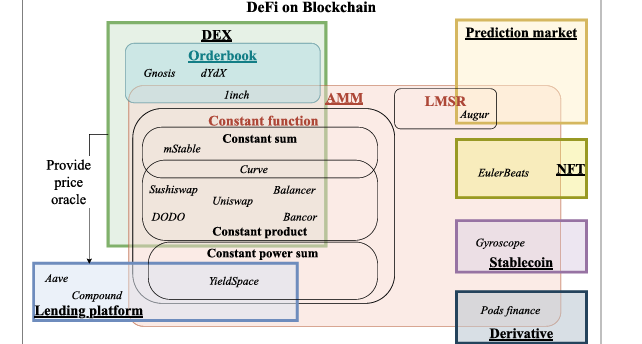

Cruz, W. H., Dahi, F., Feng, Y., Xu, J., Malhotra, A., & Tasca, P. (2025). AMM-based DEX on the XRP Ledger. International Conference on Blockchain and Cryptocurrency (ICBC). https://arxiv.org/abs/2312.13749

Ibañez, J. I., Bayer, C. N., Tasca, P., & Xu, J. (2025). Triple-Entry Accounting, Blockchain, and Next of Kin: Towards a Standardisation of Ledger Terminology. In Digital Assets: Pricing, Allocation and Regulation (pp. 198–226). Cambridge University Press. https://doi.org/10.1017/9781009362290.012

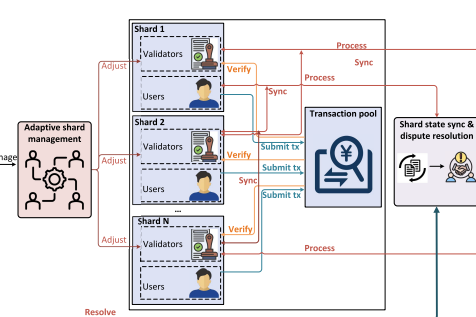

Liu, A., Chen, J., He, K., Du, R., Xu, J., Wu, C., Feng, Y., Li, T., & Ma, J. (2025). DynaShard: Secure and Adaptive Blockchain Sharding Protocol With Hybrid Consensus and Dynamic Shard Management. IEEE Internet of Things Journal, 12(5), 5462–5475. https://doi.org/10.1109/JIOT.2024.3490036

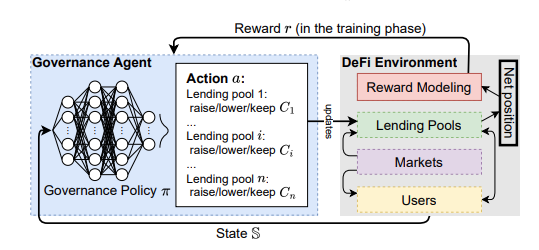

Xu, J., Feng, Y., Perez, D., & Livshits, B. (2025). Auto.gov: Learning-based Governance for Decentralized Finance (DeFi). IEEE Transactions on Services Computing, 1–14. https://doi.org/10.1109/TSC.2025.3553700

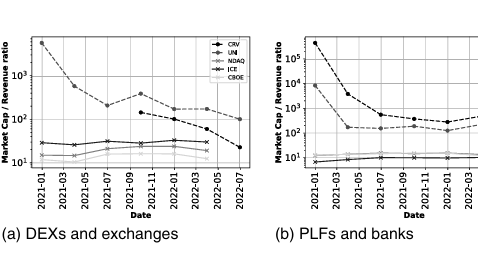

Xu, T. A., Xu, J., & Lommers, K. (2025). DeFi versus TradFi Valuation: Using Multiples and Discounted Cash Flows. In R. Aggarwal & P. Tasca (Eds.), Digital Assets: Pricing, Allocation and Regulation (pp. 44–68). Cambridge University Press. https://doi.org/10.1017/9781009362290.004

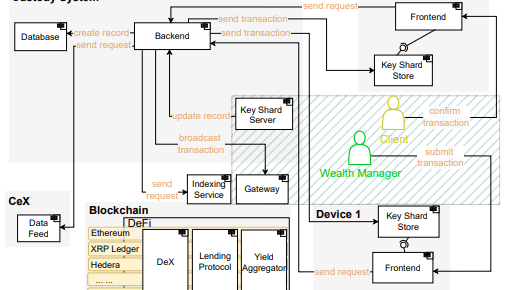

Erinle, Y., Feng, Y., Xu, J., Vadgama, N., & Tasca, P. (2024). Shared-Custodial Wallet for Multi-Party Crypto-Asset Management. Future Internet, 17(1), 7. https://doi.org/10.3390/fi17010007

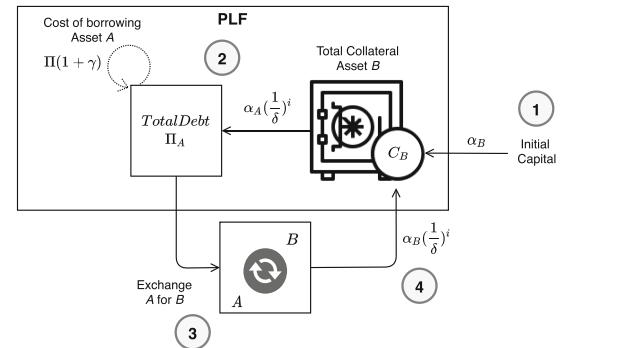

Arora, S., Li, Y., Feng, Y., & Xu, J. (2024). SecPLF: Secure Protocols for Loanable Funds against Oracle Manipulation Attacks. 19th ACM Asia Conference on Computer and Communications Security, 12, 1394–1405. https://doi.org/10.1145/3634737.3637681

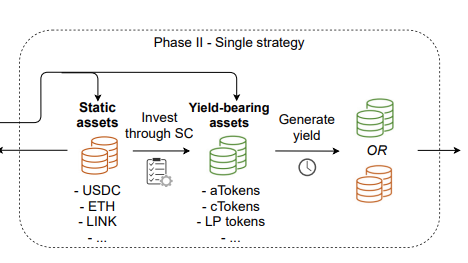

Xu, J., & Feng, Y. (2023). Reap the Harvest on Blockchain: A Survey of Yield Farming Protocols. IEEE Transactions on Network and Service Management, 20(1), 858–869. https://doi.org/10.1109/TNSM.2022.3222815

Ibañez, J. I., Bayer, C. N., Tasca, P., & Xu, J. (2023). REA, Triple-Entry Accounting and Blockchain: Converging Paths to Shared Ledger Systems. Journal of Risk and Financial Management, 16(9), 382. https://doi.org/10.3390/jrfm16090382

Xu, T. A., & Xu, J. (2023). A Short Survey on Business Models of Decentralized Finance (DeFi) Protocols. International Conference on Financial Cryptography and Data Security (FC), 197–206. https://doi.org/10.1007/978-3-031-32415-4_13

Xu, J., Paruch, K., Cousaert, S., & Feng, Y. (2023). SoK: Decentralized Exchanges (DEX) with Automated Market Maker (AMM) Protocols. ACM Computing Surveys, 55(11), 1–50. https://doi.org/10.1145/3570639

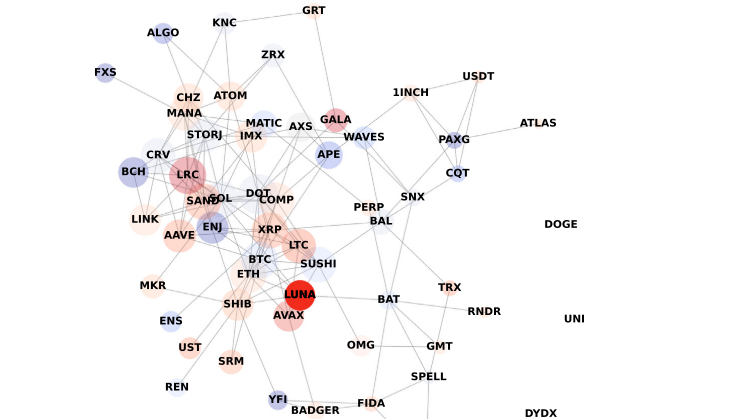

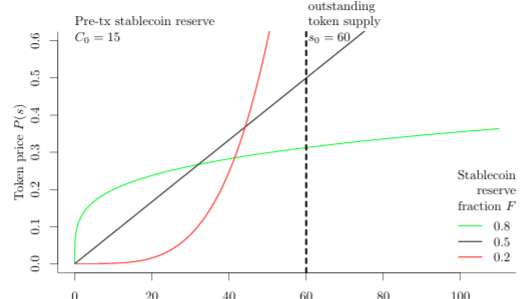

Briola, A., Vidal-Tomás, D., Wang, Y., & Aste, T. (2023). Anatomy of a Stablecoin’s failure: The Terra-Luna case. Finance Research Letters, 51, 103358. https://doi.org/10.1016/J.FRL.2022.103358

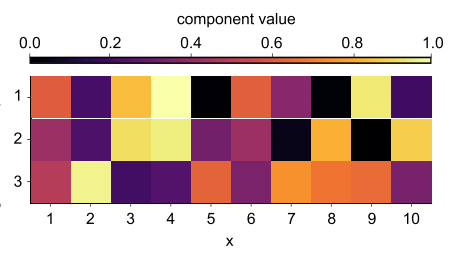

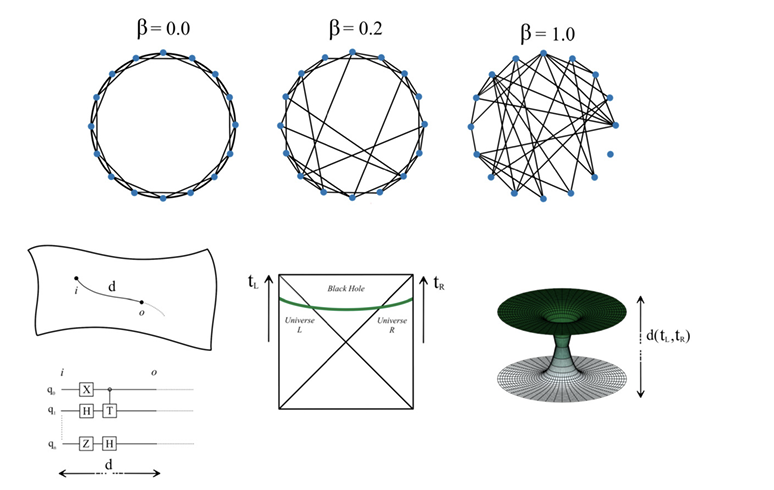

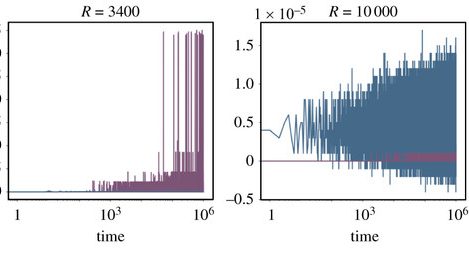

Di Antonio, G., Vinci, G. V., Pietronero, L., & Javarone, M. A. (2023). The rise of rationality in blockchain dynamics. New Journal of Physics, 25, 123042. https://doi.org/10.1088/1367-2630/AD149A

Javarone, M. A. (2023). Complexity is a matter of distance. Physics Letters A, 479, 128926. https://doi.org/10.1016/J.PHYSLETA.2023.128926

Javarone, M. A., Di Antonio, G., Vinci, G. V., Cristodaro, R., Tessone, C. J., & Pietronero, L. (2023). Disorder unleashes panic in bitcoin dynamics. Journal of Physics: Complexity, 4(4). https://doi.org/10.1088/2632-072X/AD00F7

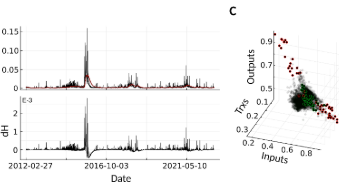

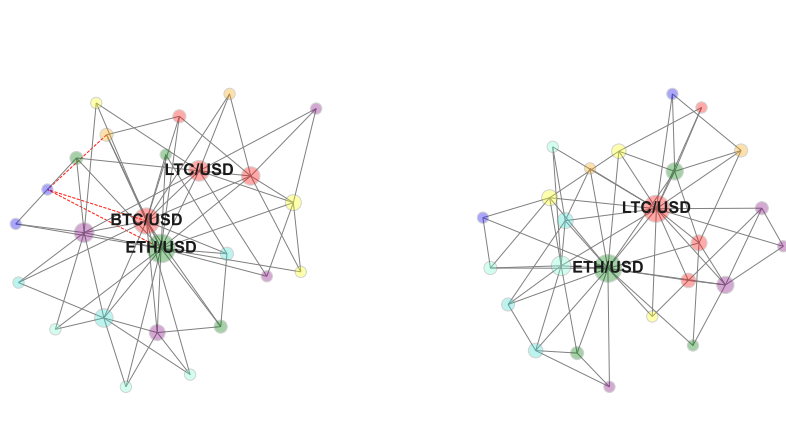

Briola, A., & Aste, T. (2022). Dependency Structures in Cryptocurrency Market from High to Low Frequency. Entropy, 24(11), 1548. https://doi.org/10.3390/E24111548

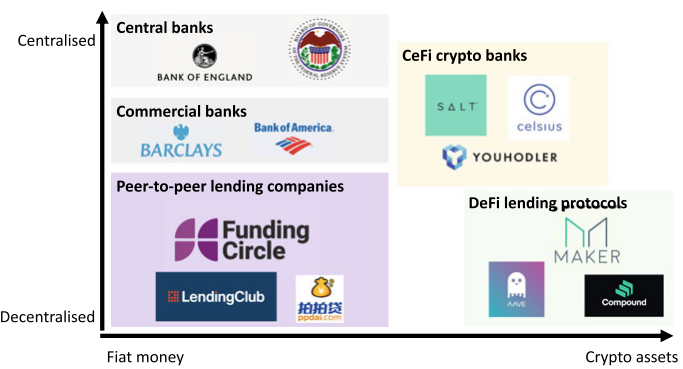

Xu, J., & Vadgama, N. (2022). From Banks to DeFi: the Evolution of the Lending Market. In N. Vadgama, J. Xu, & P. Tasca (Eds.), Enabling the Internet of Value (pp. 53–66). Springer. https://doi.org/10.1007/978-3-030-78184-2_6

Javarone, M. A., Di Antonio, G., Vinci, G. V., Pietronero, L., & Gola, C. (2022). Evolutionary dynamics of sustainable blockchains. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 478(2267). https://doi.org/10.1098/RSPA.2022.0642

Cousaert, S., Xu, J., & Matsui, T. (2022). SoK: Yield Aggregators in DeFi. International Conference on Blockchain and Cryptocurrency (ICBC), 1–14. https://doi.org/10.1109/ICBC54727.2022.9805523

Feng, Y., Xu, J., & Weymouth, L. (2022). University Blockchain Research Initiative (UBRI): Boosting blockchain education and research. IEEE Potentials, 41(6), 19–25. https://doi.org/10.1109/mpot.2022.3198929

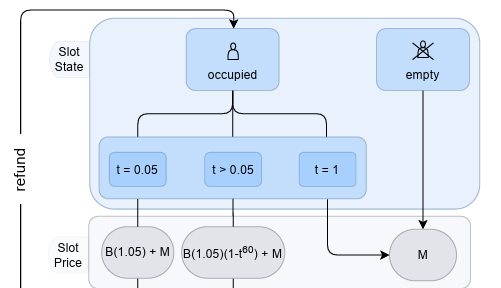

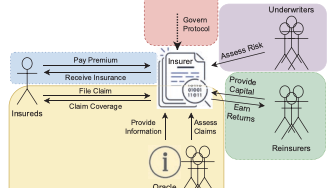

Cousaert, S., Vadgama, N., & Xu, J. (2022). Token-Based Insurance Solutions on Blockchain. In Blockchains and the Token Economy: Theory and Practice (pp. 237–260). Palgrave Macmillan. https://doi.org/10.1007/978-3-030-95108-5_9

Perez, D., Werner, S. M., Xu, J., & Livshits, B. (2021). Liquidations: DeFi on a Knife-edge. International Conference on Financial Cryptography and Data Security (FC), 457–476. https://doi.org/10.1007/978-3-662-64331-0_24

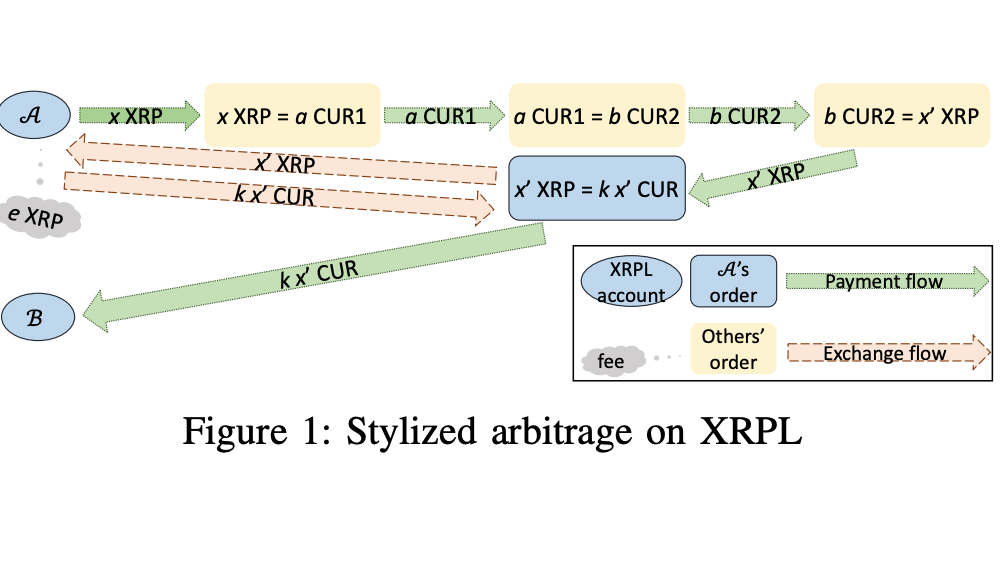

Peduzzi, G., James, J., & Xu, J. (2021). Jack the Rippler: Arbitrage on the Decentralized Exchange of the XRP Ledger. 3rd IEEE Conference on Blockchain Research & Applications for Innovative Networks and Services (BRAINS), 1–2. https://doi.org/10.1109/BRAINS52497.2021.9569833

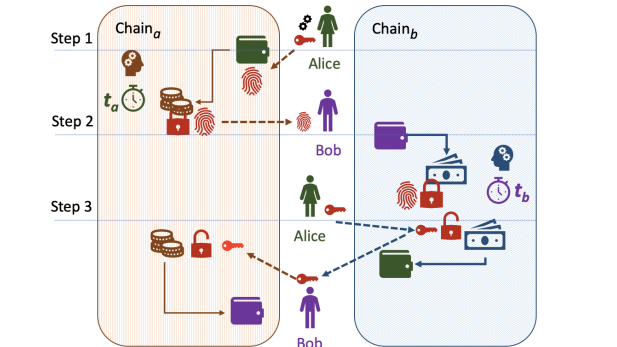

Xu, J., Ackerer, D., & Dubovitskaya, A. (2021). A Game-Theoretic Analysis of Cross-Chain Atomic Swaps with HTLCs. International Conference on Distributed Computing Systems (ICDCS), 584–594. https://doi.org/10.1109/ICDCS51616.2021.00062

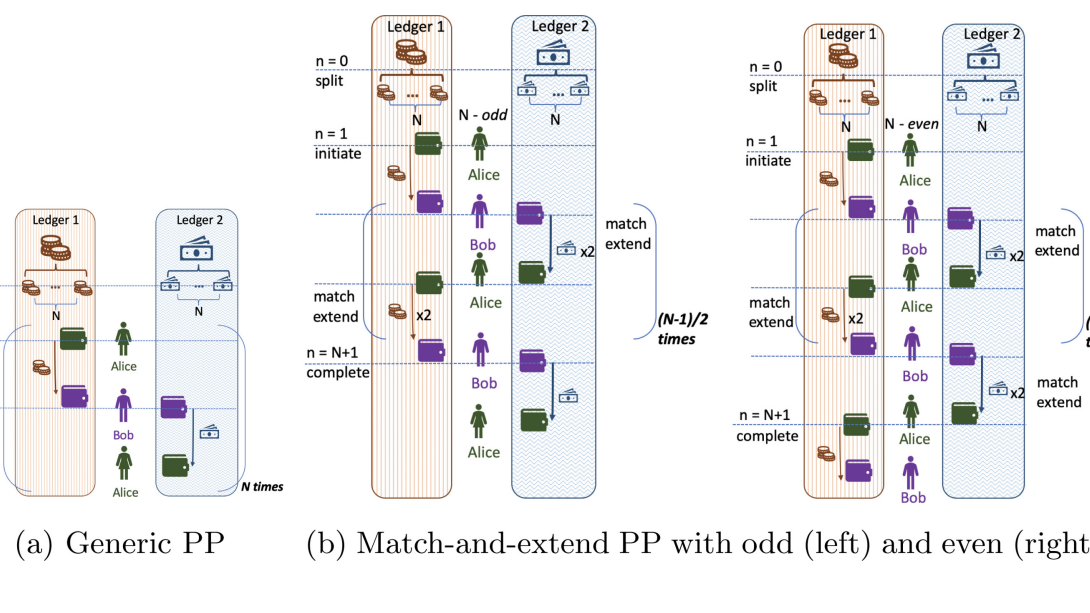

Dubovitskaya, A., Ackerer, D., & Xu, J. (2021). A Game-Theoretic Analysis of Cross-ledger Swaps with Packetized Payments. International Conference on Financial Cryptography and Data Security (FC), 177–187. https://doi.org/10.1007/978-3-662-63958-0_16

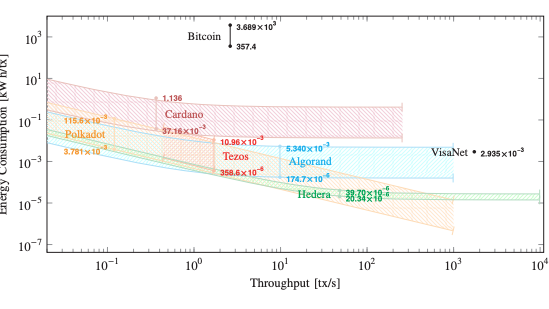

Platt, M., Sedlmeir, J., Platt, D., Xu, J., Tasca, P., Vadgama, N., & Ibañez, J. I. (2021). The Energy Footprint of Blockchain Consensus Mechanisms Beyond Proof-of-Work. 21st International Conference on Software Quality, Reliability and Security Companion (QRS-C), 1135–1144. https://doi.org/10.1109/QRS-C55045.2021.00168

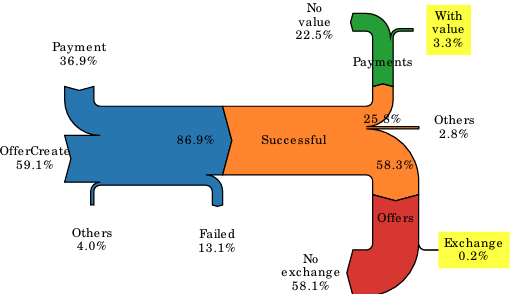

Perez, D., Xu, J., & Livshits, B. (2020). Revisiting Transactional Statistics of High-scalability Blockchains. Internet Measurement Conference (IMC), 16(20), 535–550. https://doi.org/10.1145/3419394.3423628

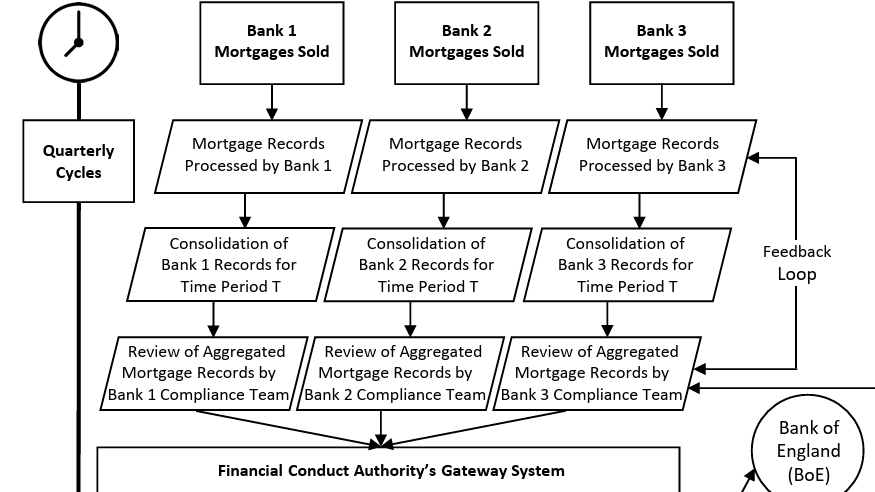

Gozman, D., Liebenau, J., & Aste, T. (2020). A Case Study of Using Blockchain Technology in Regulatory Technology. MIS Quarterly Executive, 19(1), 19–37. https://doi.org/10.17705/2MSQE.00023

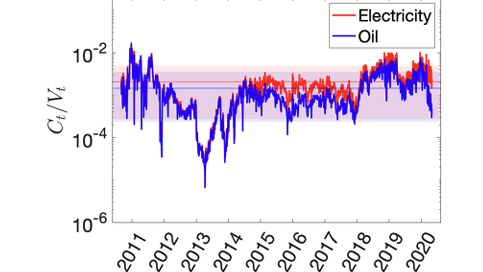

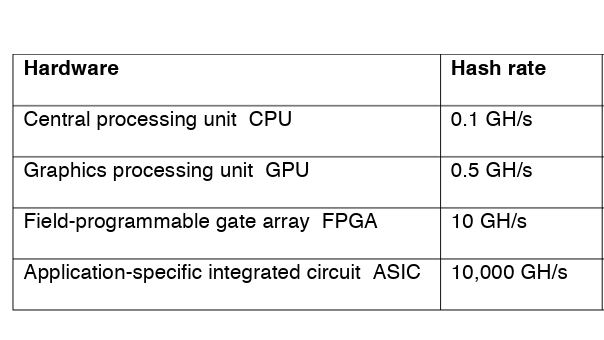

Song, Y. der, & Aste, T. (2020). The Cost of Bitcoin Mining Has Never Really Increased. Frontiers in Blockchain, 3. https://doi.org/10.3389/FBLOC.2020.565497

Richardson, A., & Xu, J. (2020). Carbon Trading with Blockchain. In P. Pardalos, I. Kotsireas, Y. Guo, & W. Knottenbelt (Eds.), 2nd International Conference of Mathematical Research for Blockchain Economy (MARBLE) (pp. 105–124). https://doi.org/10.1007/978-3-030-53356-4_7

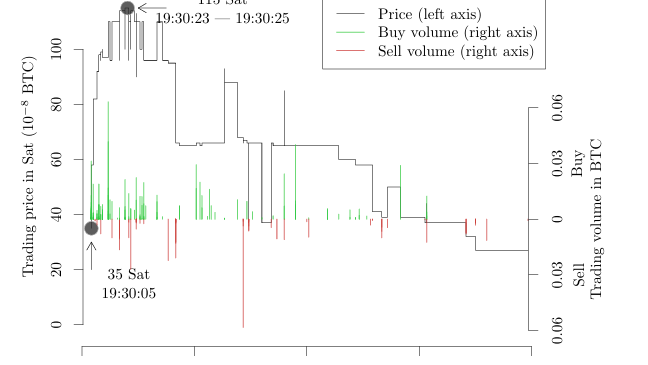

Xu, J., & Livshits, B. (2019). The anatomy of a cryptocurrency pump-and-dump scheme. 28th USENIX Security Symposium, 1609–1625. https://www.usenix.org/system/files/sec19-xu-jiahua_0.pdf

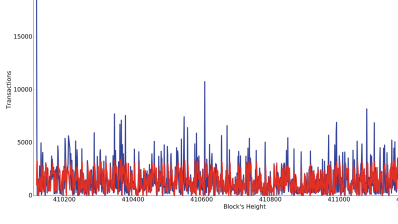

Pappalardo, G., Di Matteo, T., Caldarelli, G., & Aste, T. (2018). Blockchain inefficiency in the Bitcoin peers network. EPJ Data Science, 7(30), 1–13. https://doi.org/10.1140/EPJDS/S13688-018-0159-3

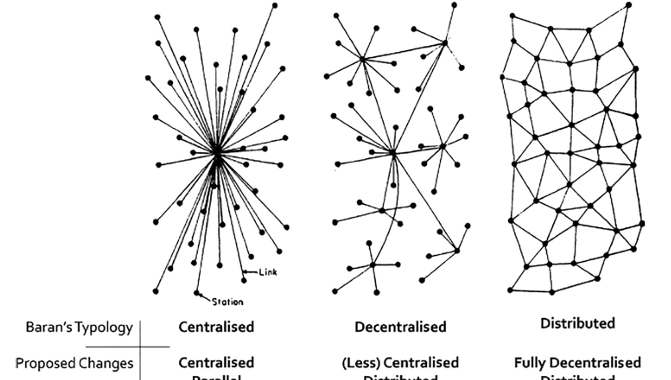

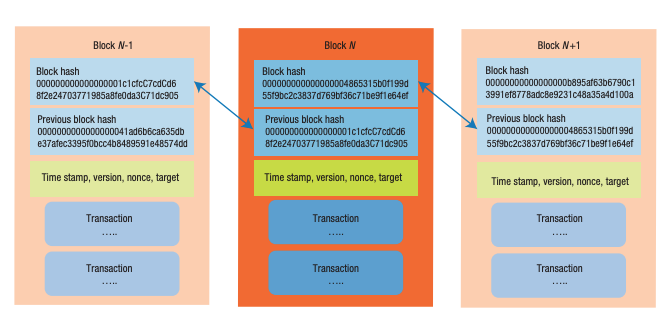

Aste, T., Tasca, P., & Di Matteo, T. (2017). Blockchain Technologies: The Foreseeable Impact on Society and Industry. Computer, 50(9), 18–28. https://doi.org/10.1109/MC.2017.3571064

Aste, T. (2016). The Fair Cost of Bitcoin Proof of Work. SSRN Electronic Journal. https://doi.org/10.2139/SSRN.2801048